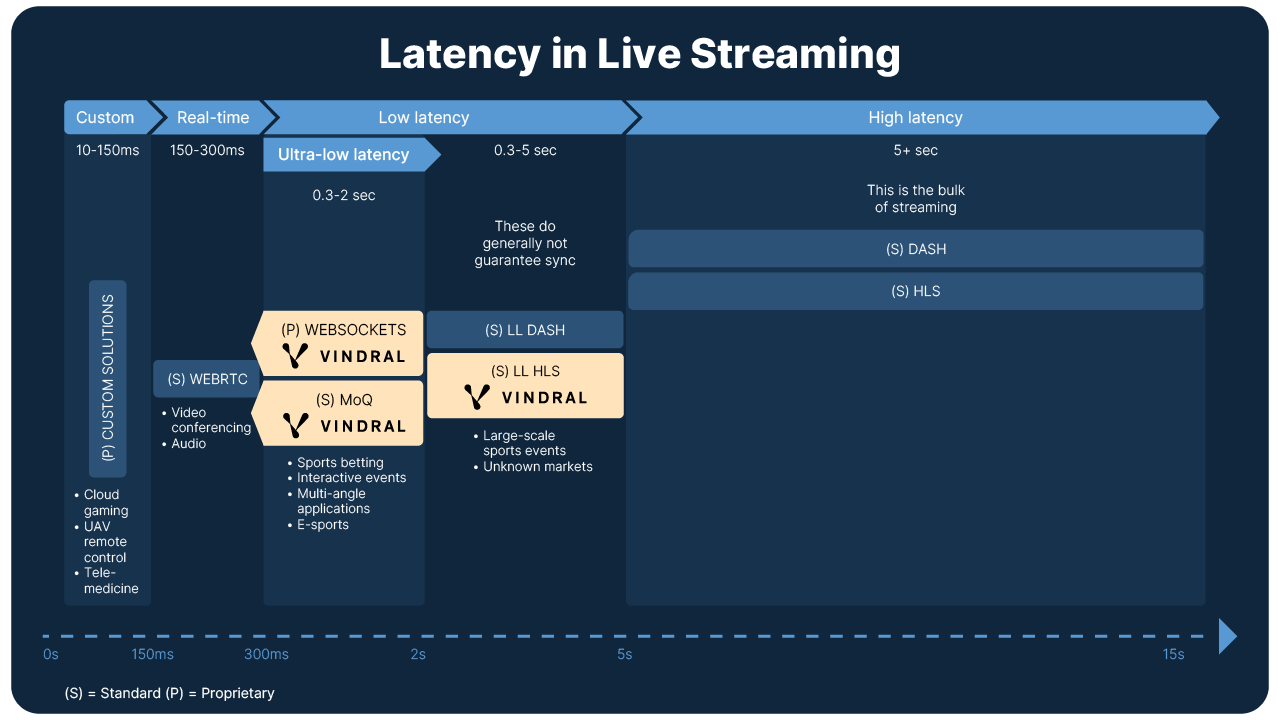

Dividing latency into segments helps isolate the needs and requirements of a given platform or subset of viewers. Vendors and industry voices have defined terms that aim to provide clarity and facilitate decision-making. To add detail, we zoom in on each segment and outline its use cases, available technologies and implications. Keep in mind that the technologies offering these different performance points also differ in terms of playback synchronization: some allow viewers to drift apart, others do not.

High latency (5+ seconds glass-to-glass)

The Internet is built for stability first and foremost. To maximize success in traffic flow and minimize lost information, file-based transfer protocols have built-in methods of creating resilience. This was adopted by early live streaming solutions, which matured into the HLS and DASH standards. While not synchronized, they provide robust methods of delivery and are the most widely used live streaming technologies. For most web television use cases, where latencies below 10 seconds are not a priority, choosing a high latency delivery method is a solid decision. Commonly trusted DRM methods and ad-insertion solutions are compatible with these high-latency solutions, which is relevant for many broadcasters.

Low latency (0.3 – 5 seconds glass-to-glass)

Please note that Low latency also includes the sub-category Ultra-low latency, which is outlined further below.

Low-latency counterparts evolved from the HLS and DASH standards. In recent years, latency has become more important for certain sports events, web-TV broadcasts and use cases where slow interactivity is acceptable. Vindral LiveCloud adopted Low-Latency HLS (LL-HLS) as one possible delivery method in 2024 as an extension of its standard delivery. As a technology, it facilitates the use of DRM and ad-insertion, which are instrumental for the viability of certain business models within sports and entertainment. The option to easily distribute HLS segments on third-party CDNs also makes these standards attractive for scenarios where large concurrent audiences might gather, sometimes unexpectedly. LL-HLS is recommended for concurrencies of 2 million viewers and up. One drawback is the lack of synchronization, unless a custom solution is built to keep viewers at the same point of the stream at all times.
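The custom synchronization solution mentioned above is not described in this article. One widely used approach in web players is to speed up or slow down playback slightly until each viewer sits at a shared target latency. The sketch below illustrates that idea only; the function name, parameters and default values are assumptions made for illustration, not part of Vindral LiveCloud or of the LL-HLS specification.

```python
def playback_rate(current_latency_s: float,
                  target_latency_s: float,
                  tolerance_s: float = 0.1,
                  max_adjust: float = 0.05,
                  gain: float = 0.1) -> float:
    """Return a playback rate that steers a viewer toward the target latency.

    All parameters are hypothetical tuning knobs:
    - tolerance_s: drift (in seconds) that is ignored to avoid constant changes
    - max_adjust:  largest allowed deviation from normal speed (0.05 = 5 %)
    - gain:        how strongly the rate reacts to each second of drift
    """
    # Positive drift: the viewer is further behind live than intended.
    drift = current_latency_s - target_latency_s
    if abs(drift) <= tolerance_s:
        return 1.0  # close enough, play at normal speed

    # Proportional correction, clamped so the change stays barely noticeable.
    adjust = max(-max_adjust, min(max_adjust, gain * drift))
    return 1.0 + adjust
```

A player would call such a function periodically with its measured latency and apply the result as its playback rate. Viewers who have fallen behind play slightly faster, and viewers who are ahead play slightly slower, so the audience converges on the same point of the stream.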

Sub-category: Ultra-low latency (0.3 – 2 seconds)

Around 2014, mentions of ultra-low latency started to appear broadly. As Flash was a dying technology, different standards and custom solutions were proposed to make streaming at sub-second latency possible. One central challenge is maintaining stream stability as tolerances decrease. Early solutions built on WebSocket technologies, along with WebRTC implementations, were created to meet this need; some were scalable, others were not.

The most modern approach is a standard called Media over QUIC (MoQ), which Vindral LiveCloud has adopted as its primary method of delivery. Ultra-low latency is one main advantage, and viewer synchronization is an equally important promise. MoQ has also opened up a path for Vindral LiveCloud to support ad-insertion and standardized DRM. Performance on challenging networks, from mobile Internet to home Wi-Fi, is unmatched, which is a high priority since it allows high video quality to be maintained.

Media over QUIC is suitable for live sports, both with betting and without, live events, e-sports, auctions, public safety applications, iGaming (live casino) and other scenarios where interactivity without lag is required. Also, the growing number of multi-angle experiences requires synchronization in order for angle switching to be smooth and coherent.

If you are looking to build an application or live streaming service using Media over QUIC, do not hesitate to contact us. Vindral LiveCloud exists both as a cloud SaaS and as an OEM solution for white-labeling, and as any hybrid in between.

“Real-time” (150 – 300 ms)

Though there is a category at lower latency than this, “real-time” has been adopted by the industry as the term for a certain type of solution. Two-way human voice communication is only viable at 200 ms or lower latency. For this, WebRTC was developed as a method for video conferencing. While it is sensitive to network conditions and prone to quickly degrading video quality, it is an unmatched solution for two-way video calls where voice and a natural conversation pace are the most important aspects. Real-time technologies were considered a solution for ultra-low latency early on but have struggled to gain traction among vendors because of the known quality degradation issues. Note: In particular cases such as local streaming for sports venues or in private CDNs, Vindral Live’s WebSocket and MoQ solutions can be tuned to perform in this “real-time” category.

Below real-time (15 – 150 ms)

For lack of a better term, there is a specific category of live streaming use cases that requires considerably higher performance than the technologies implied by the industry term “real-time”. For exotic use cases such as telemedicine or cloud gaming, the user is ideally kept below 50 ms of latency, while latencies above 200 ms are unacceptable (paper). Cloud gaming is often mistaken for iGaming or e-sports, which are different use cases altogether. While iGaming is in essence gambling and e-sports is professional or amateur competition in games, cloud gaming is the offering of remote play on consoles or computers in the cloud. The user controls a game that is rendered elsewhere, which requires extremely low latency to be viable. Solutions for this type of application are most often custom-built.
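The segments described in this article can be summarized as a small lookup. The sketch below maps a measured glass-to-glass latency to the category names used above. The function name is hypothetical, and the handling of exact boundary values (each range includes its lower bound and excludes its upper bound) is an assumption, since the article does not specify it.

```python
def classify_latency(seconds: float) -> str:
    """Map a glass-to-glass latency in seconds to this article's segments.

    Assumption: each range includes its lower bound and excludes its upper
    bound, so exactly 0.3 s counts as ultra-low latency, not "real-time".
    """
    if seconds < 0:
        raise ValueError("latency cannot be negative")
    if seconds < 0.015:
        return "unclassified"  # below the 15 ms floor used in the article
    if seconds < 0.150:
        return "below real-time"
    if seconds < 0.300:
        return "real-time"
    if seconds < 2.0:
        # Ultra-low latency is a sub-category of low latency.
        return "low latency (ultra-low)"
    if seconds < 5.0:
        return "low latency"
    return "high latency"


if __name__ == "__main__":
    for sample in (0.05, 0.2, 1.0, 3.0, 8.0):
        print(f"{sample:>5} s -> {classify_latency(sample)}")
```

Such a helper can be useful when comparing measurements from different delivery methods against the requirements of a use case, for example checking that a cloud gaming setup stays in the lowest segment.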

If you’re interested in learning more about latency and how it impacts live streaming solutions, feel free to reach out to us at Vindral. We’re here to help you navigate the different options and find the best fit for your platform’s requirements.