Have you ever watched a live stream only to realize you’re seconds, sometimes even tens of seconds, behind the actual action? In traditional live streaming, delays of 30–40 seconds are common, which can completely change the experience. Imagine participating in a live auction, only to have your bid arrive too late due to lag. Or watching a crucial sports match and hearing your neighbors cheer for a goal before you even see it happen. In esports, where reaction times are measured in milliseconds, a slight delay can mean the difference between victory and defeat. Live betting also depends on real-time accuracy: if your stream is behind, so is your decision-making. Even in interactive events, audience engagement relies on instant responses to maintain immersion.

Ultra-low latency streaming minimizes these delays, often reducing them to sub-second levels. But it’s not just about speed. True low-latency streaming should also keep all viewers in sync and deliver high-quality video without buffering or degradation. This combination is key to delivering seamless real-time experiences, whether it’s live sports, online gaming, remote production, or interactive broadcasts. Let’s dive into what low latency streaming is, how it works, and why it’s the foundation for next-generation live content.

What is low latency streaming?

The basics of latency

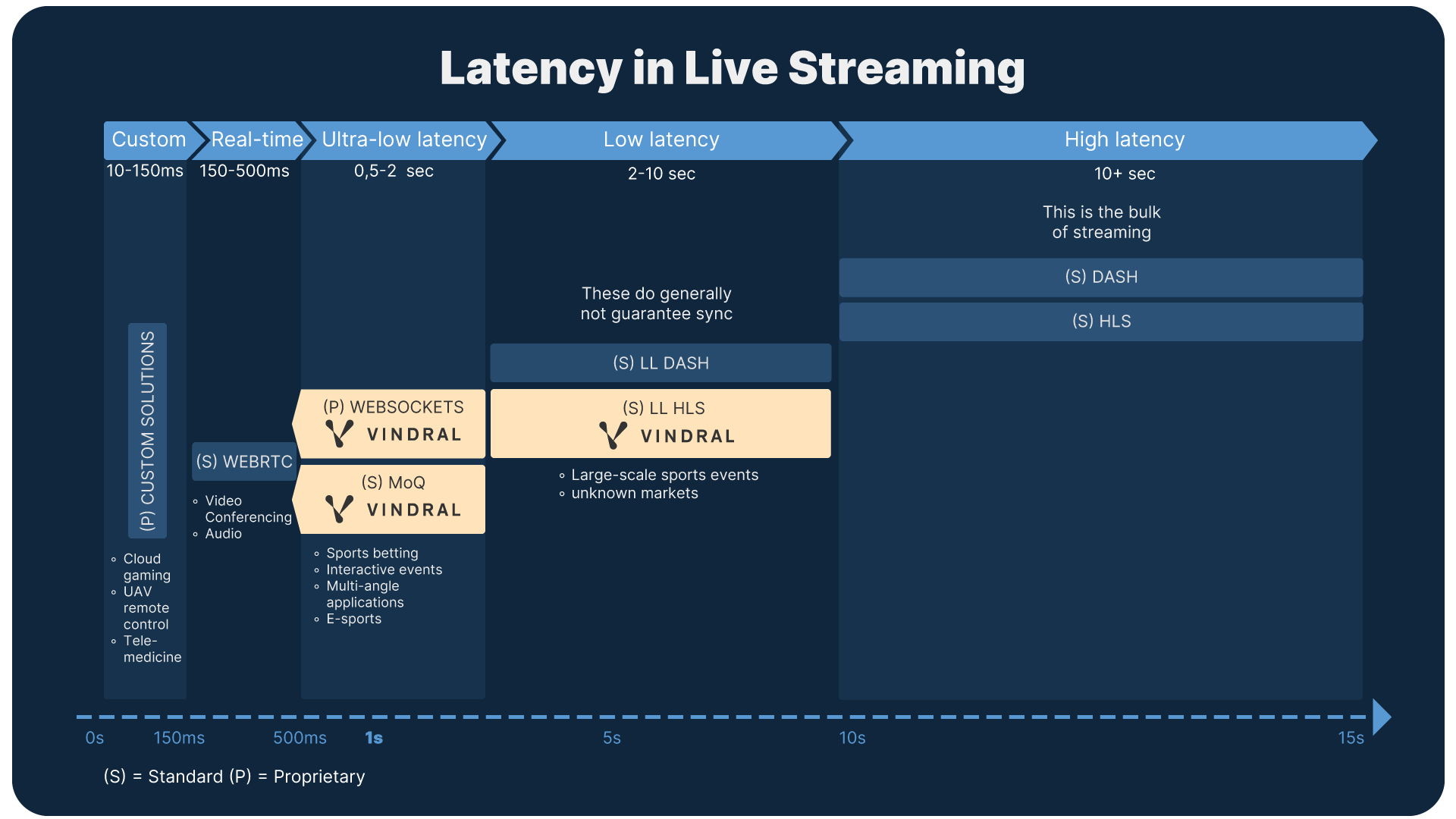

Latency is the delay between the time data is sent and the time it is received. In the context of live streaming, this means the time it takes for a live event to be captured, encoded, transmitted, and displayed to the viewer. In traditional streaming setups, this delay typically ranges from 10 to 40 seconds. Low latency streaming reduces this to just a few seconds, or even fractions of a second, so that it feels almost like you’re experiencing the event as it happens.
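To make the definition concrete, here is a minimal sketch that adds up per-stage delays into a single end-to-end figure. The stage names and millisecond values are illustrative assumptions, not measurements from any real pipeline.

```python
from typing import Mapping

# Illustrative latency budget: every stage name and value is an assumption.
STAGE_DELAYS_MS = {
    "capture_and_encode": 150,
    "first_mile_upload": 50,
    "server_processing": 100,
    "cdn_delivery": 80,
    "player_buffer": 500,
    "decode_and_render": 40,
}


def end_to_end_latency_ms(stages: Mapping[str, int]) -> int:
    """Sum the per-stage delays into one end-to-end latency figure."""
    return sum(stages.values())


def largest_contributor(stages: Mapping[str, int]) -> str:
    """Return the name of the stage that adds the most delay."""
    return max(stages, key=lambda name: stages[name])


total_ms = end_to_end_latency_ms(STAGE_DELAYS_MS)
print(f"End-to-end latency: {total_ms} ms")
print(f"Largest contributor: {largest_contributor(STAGE_DELAYS_MS)}")
```

Breaking the total down this way shows which stage is worth optimizing first; in this made-up budget, the player buffer dominates.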

Why timing matters

Not all content requires low latency. For on-demand movies or pre-recorded videos, a few extra seconds won’t impact the viewing experience. But for live scenarios such as live casino games, sports, or interactive events, even a short delay can reduce engagement. Imagine trying to place a live sports bet when your stream lags behind the actual event. This is one of the problems that low latency streaming solves.

How does low latency streaming work?

It’s not magic: low latency is achieved through a combination of efficient data encoding, optimized delivery methods, and live playback protocols. Here’s an overview of some of the relevant technologies operating at different stages of this workflow.

Streaming protocols

- SRT (Secure Reliable Transport): An open-source transport protocol designed for low-latency, high-quality video streaming over unreliable networks, such as the public internet. It encrypts streams and compensates for packet loss and jitter through retransmission and buffering, making it a strong alternative to traditional protocols like RTMP and RTP.

- WebSockets: A full-duplex protocol that enables persistent, bidirectional communication between clients and servers. While not primarily a media streaming protocol, WebSockets excel at delivering near real-time data and are useful for synchronizing events around a primary video or audio stream. Their continuous connection model reduces overhead compared to traditional request-response methods, allowing for more responsive and engaging live experiences.

- LL-HLS (Low-Latency HTTP Live Streaming): An extension of Apple’s HTTP Live Streaming protocol focused on minimizing end-to-end delay. Traditionally, HLS has relied on segment-based delivery that can introduce significant latency (often many seconds). LL-HLS tackles this by breaking media segments into smaller parts—sometimes called “chunks” or “partial segments”—allowing the player to begin playback almost immediately. This approach significantly reduces the glass-to-glass delay, making it more suitable for interactive or time-sensitive applications while retaining HLS’s compatibility and broad ecosystem support.

- CMAF (Common Media Application Format): A media streaming format designed to unify and optimize video delivery across different platforms. It was introduced to reduce fragmentation in the streaming industry by enabling a single media format to be used across both HLS (Apple’s HTTP Live Streaming) and DASH (MPEG-DASH). For low latency, CMAF segments can be split into smaller chunks that are sent to the player before the full segment has been produced.

- WebRTC (Web Real-Time Communication): A technology that enables real-time audio, video, and data communication directly between web browsers and applications without requiring additional plugins or external software. It operates using peer-to-peer (P2P) connections, reducing latency and improving efficiency for live interactions.

- MoQ (Media over QUIC): An emerging media delivery protocol designed to improve live media streaming by leveraging QUIC, a modern internet transport protocol originally developed by Google and since standardized by the IETF. MoQ itself is still being defined within the IETF. It aims to enhance scalability, reduce latency, and improve reliability for real-time media delivery, including live streaming and interactive applications.
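The effect of LL-HLS partial segments can be sketched with simple arithmetic. HLS players conventionally start playback about three target durations behind the live edge, and the LL-HLS specification recommends a hold-back of at least three part durations. The 6-second segment and 0.5-second part durations below are assumed example values, and the result covers only the player’s hold-back, not encoding or network delay.

```python
def hold_back_seconds(unit_duration_s: float, units_held_back: int = 3) -> float:
    """Distance from the live edge when the player holds back N units.

    A "unit" is a full segment in traditional HLS and a partial segment
    in LL-HLS. The default of 3 follows the commonly recommended hold-back.
    """
    return unit_duration_s * units_held_back


# Assumed example durations, not values taken from any specific deployment.
SEGMENT_DURATION_S = 6.0
PART_DURATION_S = 0.5

traditional_hls = hold_back_seconds(SEGMENT_DURATION_S)
low_latency_hls = hold_back_seconds(PART_DURATION_S)

print(f"Traditional HLS hold-back: {traditional_hls} s")
print(f"LL-HLS hold-back:          {low_latency_hls} s")
```

With these assumed durations the hold-back drops from 18 seconds to 1.5 seconds, which is why smaller delivery units matter more than any single network optimization.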

The role of CDNs

Content Delivery Networks (CDNs) play a big role in low latency streaming. They work by caching and delivering content from servers located closer to viewers, reducing the physical distance data has to travel. This helps speed up delivery while keeping streams stable, even during peak traffic.
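As a rough illustration of why distance matters, the sketch below estimates round-trip propagation time over optical fiber, where light travels at roughly 200,000 km/s. The two distances are hypothetical, and the estimate ignores routing detours, queuing, and server processing, so real round-trip times will be higher.

```python
# Approximate signal speed in optical fiber (about two-thirds of c).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay in milliseconds."""
    return distance_km * 2 * 1000 / FIBER_SPEED_KM_PER_S


# Hypothetical distances: a distant origin versus a nearby edge server.
ORIGIN_DISTANCE_KM = 8_000
EDGE_DISTANCE_KM = 200

origin_rtt = round_trip_ms(ORIGIN_DISTANCE_KM)
edge_rtt = round_trip_ms(EDGE_DISTANCE_KM)

print(f"Origin round trip: {origin_rtt:.1f} ms")
print(f"Edge round trip:   {edge_rtt:.1f} ms")
```

Every request a player makes for a manifest or segment pays this round trip at least once, so serving from a nearby edge removes delay that no amount of encoder tuning can recover.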

Why low latency streaming is important

1. Powering real-time interaction: In live applications like gaming, auctions, and virtual events, low latency is key for instant responses. It enables players to react quickly, bidders to place offers at the right moment, and audiences to engage without noticeable delay.

2. Enhancing the viewing experience: Even viewers who are simply watching rather than interacting with the broadcast can become bored or feel disconnected when there’s a significant delay. Keeping latency low helps them stay engaged and connected to the live action.

3. Maintaining competitive fairness: In contexts like sports betting or gaming tournaments, fairness hinges on everyone seeing the action at the same time. Low latency streaming, combined with synchronized playback, reduces the risk that anyone gains an unfair advantage due to delays.

Industries benefiting from low latency streaming

Live Sports & Esports

Low latency streaming reduces the risk of spoilers from social media or betting platforms and supports interactive elements such as overlays and instant replays.

iGaming & Online Betting

In online gambling and sports wagering, video feeds, betting odds, and user actions must stay aligned to keep a level playing field and an engaging atmosphere. This alignment promotes confidence in the platform.

Live Auctions & Bidding Platforms

Live auctions need timely updates to ensure fair bidding. A delayed or unreliable stream can cause participants to miss opportunities, so low latency helps avoid misunderstandings and keeps transactions transparent for both buyers and sellers.

Remote Production & Broadcast

Remote production (also known as REMI—Remote Integration Model) allows broadcasters and media teams to produce live events from a central control room while capturing footage from multiple remote locations.

Delays in video transmission can make it difficult for directors, producers, and technical operators to coordinate shots, switch between feeds, and manage audio in real time. Even the smallest delays can lead to out-of-sync visuals and audio, impacting the overall quality of a broadcast.

Other Use Cases

Low latency streaming is also helpful in video conferencing (where delays disrupt conversations), public safety (where timely video can guide response efforts), telemedicine (where doctors rely on live feeds for remote consultations), and cloud gaming or extended reality (where prompt responsiveness greatly improves the user experience).

Industry trends in low latency streaming

MoQ (Media over QUIC)

MoQ is an emerging protocol designed to improve low-latency streaming by leveraging QUIC, a transport protocol that offers faster connection setup, independent multiplexed streams that avoid head-of-line blocking between one another, and modern congestion control. MoQ aims to enable more efficient live streaming, with benefits like multiplexed media streams, improved caching, and lower latency compared to traditional HTTP-based streaming methods. As it matures, MoQ is expected to play a significant role in live sports, gaming, and large-scale event streaming.

Edge computing and P2P delivery

To reduce reliance on centralized servers, edge computing and peer-to-peer (P2P) streaming are being explored as ways to deliver content faster. By caching and processing media closer to the user, edge computing can minimize delay, while P2P approaches reduce strain on content delivery networks (CDNs) by allowing users to share stream segments directly. This hybrid approach is particularly useful for large-scale live events with high concurrent viewership.

Evolving Mobile Networks (5G/6G)

As mobile networks continue to advance, from wider 5G rollouts to emerging 6G technologies, streaming services can capitalize on increased bandwidth, reduced network congestion, and improved reliability. These benefits are particularly important for mobile live streaming, remote production, and interactive experiences, where a stable, high-speed connection is essential. As each new generation of wireless networks rolls out, we can expect even lower latency and more immersive streaming experiences on the go.

Challenges in achieving low latency streaming

While low latency streaming has clear benefits, implementing it comes with its own set of challenges:

- Network congestion: One of the biggest hurdles is network congestion. High traffic levels, especially during large-scale live events, can overwhelm servers and reduce streaming performance. Limited bandwidth further complicates this issue, particularly in regions with underdeveloped network infrastructure.

- Device compatibility: Not all devices and platforms support protocols like WebRTC, SRT, or formats like CMAF. Ensuring that streams are accessible across multiple devices and browsers while maintaining low latency can be challenging.

- Bandwidth costs: Low latency often requires higher bandwidth than traditional streaming methods. Delivering real-time content, especially high-quality video, needs efficient data transmission, which increases overall bandwidth usage. This can result in significant operational costs, especially for large-scale events or platforms with a growing audience.
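The bandwidth cost challenge above can be estimated with back-of-the-envelope arithmetic. The sketch below computes total egress for one event; the audience size, bitrate, duration, and especially the price per gigabyte are hypothetical placeholders, so substitute your own figures.

```python
def egress_gb(viewers: int, bitrate_mbps: float, duration_s: float) -> float:
    """Total data delivered, in decimal gigabytes.

    Megabits are converted to megabytes (divide by 8), then to gigabytes
    (divide by 1000).
    """
    return viewers * bitrate_mbps * duration_s / 8 / 1000


# Hypothetical event: 10,000 viewers watching a 5 Mbps stream for 2 hours.
VIEWERS = 10_000
BITRATE_MBPS = 5.0
DURATION_S = 2 * 60 * 60
PRICE_PER_GB_USD = 0.02  # placeholder price, not a quote from any provider

total_gb = egress_gb(VIEWERS, BITRATE_MBPS, DURATION_S)
estimated_cost = total_gb * PRICE_PER_GB_USD

print(f"Egress: {total_gb:,.0f} GB")
print(f"Estimated cost: ${estimated_cost:,.2f}")
```

Because the total scales linearly with both audience size and bitrate, doubling either one doubles the bill, which is why encoding efficiency and cost are closely linked.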

How to implement low latency streaming

Now that you’ve read about the benefits, how do you go about implementing it? The approach depends on your use case, network conditions, and technical setup, but here are some key steps to help you achieve minimal delay while maintaining quality and reliability.

- Choose the right protocols: Select a protocol that suits your use case, whether it’s WebSockets for interactivity or LL-HLS for HTTP-based streaming.

- Use a reliable CDN: A CDN ensures content is delivered quickly by caching data on edge servers closer to your audience. This minimizes the physical distance data must travel, reducing latency and ensuring consistent performance. Choose a CDN provider with a strong global network for better scalability.

- Optimize video encoding: Efficient encoding is critical for balancing low latency and high-quality video. Use efficient codecs such as H.264, H.265 (HEVC), or AV1 to reduce bitrate while preserving visual quality, and leverage hardware-accelerated encoding to speed up the processing of video streams.

- Implement adaptive bitrate streaming: Adaptive bitrate streaming adjusts video quality dynamically based on the viewer’s network conditions. This reduces buffering and keeps playback smooth, even in areas with inconsistent bandwidth.

- Test and monitor performance: Use analytics tools to monitor latency, viewer engagement, and error rates so you can identify and resolve potential bottlenecks. Simulate high-traffic conditions to ensure your infrastructure can handle peak loads without compromising latency.
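The adaptive bitrate step above can be sketched as a simple throughput-based rule: pick the highest rendition that fits within a safety margin of the measured throughput. The bitrate ladder and the 0.8 safety factor are assumptions for illustration; production players typically also weigh buffer level and recent switching history.

```python
from typing import Sequence

# Hypothetical bitrate ladder in kilobits per second.
RENDITIONS_KBPS = (400, 1200, 2500, 5000)


def pick_rendition(
    throughput_kbps: float,
    ladder: Sequence[int] = RENDITIONS_KBPS,
    safety_factor: float = 0.8,
) -> int:
    """Choose the highest rendition that fits the throughput budget.

    The safety factor leaves headroom so short throughput dips do not
    immediately stall playback. If nothing fits, fall back to the lowest
    rendition rather than stopping playback.
    """
    budget = throughput_kbps * safety_factor
    candidates = [bitrate for bitrate in ladder if bitrate <= budget]
    return max(candidates) if candidates else min(ladder)


for measured in (300, 3500, 10_000):
    print(f"{measured} kbps measured -> {pick_rendition(measured)} kbps rendition")
```

In a low latency setup the player holds only a small buffer, so a conservative safety factor matters more than it does in traditional streaming, where a long buffer can absorb a bad estimate.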

If you’d like to learn more about low latency streaming solutions and how they can elevate your live content delivery, Vindral can help. Contact us to learn more.

FAQs

1. What is low latency streaming?

Low latency streaming minimizes the delay between an event and its display to viewers, enabling near real-time delivery.

2. How does MoQ improve low latency streaming?

MoQ uses the QUIC protocol to reduce connection setup times and optimize data flow, making it effective for real-time applications.

3. Which industries benefit from low latency streaming?

Industries like gaming, sports broadcasting, virtual events, and financial services rely on low latency for seamless experiences.

4. How can I implement low latency streaming?

Start with protocols like WebSockets or LL-HLS, integrate a reliable CDN, optimize your encoding settings, and use adaptive bitrate streaming.

5. Why is low latency important for live sports?

Low latency ensures fans can watch events in sync with real-time action, maintaining engagement and fairness.